Manufacturing Communities with Imagined Consent: Part I

Trust is a primitive of the societal production function.

0.

And, you know, there’s no such thing as society. There are individual men and women and there are families.

Or at least, that’s what Maggie told us in 1987. This atomised view of the world has been the central tenet of the liberal establishment’s ethical guidebook ever since. In this moral universe, the individual is awarded a distinctly privileged status as the default unit of analysis.

But can we really disregard society? Let’s go back to humanity’s Biblical beginnings and find out. In Genesis 4, we are introduced to the first humans who were born: Cain and Abel.

And Cain talked with Abel his brother; and it came to pass, when they were in the field, that Cain rose up against Abel his brother and slew him. And the Lord said unto Cain, “Where is Abel thy brother?”. And he said, “I know not. Am I my brother’s keeper?”.

After Adam and Eve’s fall, the first thing mankind did was commit fratricide. Why? Because Cain desired the same thing Abel desired – God’s approval – but he could not have it for himself. When human civilisation starts out with murder, it is no wonder that Hobbes thought life was “solitary, poor, nasty, brutish and short”.

I.

This isn’t that surprising: rational humans look out for themselves. They do what it takes to improve their own circumstances, social consequences be damned. The state of nature is the world of Moloch: one of the chief of Satan’s angels in Paradise Lost.

All Moloch asks is that you sacrifice your values – in exchange, what he offers is whatever your heart desires. If you reject his deal, others will take advantage of this devil’s bargain and leave you in the dust. Natural selection will eliminate you. But if you accept his deal, you are left an empty husk devoid of care or consideration.

So while Moloch reigns, we are pitted against each other in this race to the bottom, robbed of the audacity to hope for greater things. Meanwhile, he devours all that is good, until there is nothing left.

Wait a second. What does Captain Picard say in the face of an all-consuming adversary?

The line must be drawn here! This far, no further!

That’s right. Look around! For the 300,000 years since the first Homo sapiens emerged in Africa, we have drawn a line in the sand and said “no further”. At every turn, we have outwitted Moloch and avoided gradient descending into bad equilibria. By the sheer magic of human drive and ambition, we have built the most prosperous civilisations the world has ever seen. We have sent men to the moon and peered even further into the expanses of our universe.

How have we refused Moloch’s alluring offers? Or for that matter, how have you refused Moloch’s alluring offers? I wager it has something to do with the communities which we all participate in, which make us greater than the sum of our parts.

II.

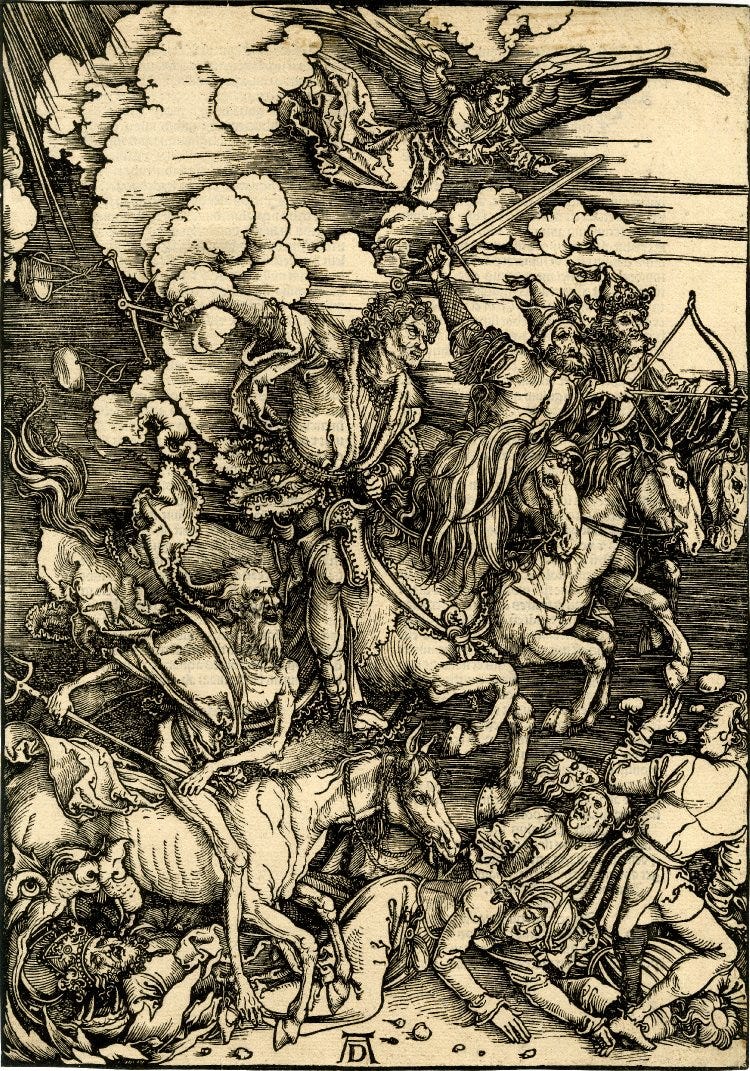

Ezekiel 14 lists the four judgments that these four horsemen of the apocalypse are often linked to:

The sword and the famine and the noisome beast and the pestilence.

Staving off these horsemen requires more than just individual ingenuity; it requires collective action. And so as soon as mankind was born, tribes were formed too.

Sword: Suppose you and another person are going on an expedition. You usually use a sword while they use a spear. You will both be able to fight off dangers better if you use the same weapon, but you prefer to use your usual choice.

In game-theoretic terms, we would say that there are two pure strategy Nash equilibria[1]. These happen to be the optimal outcomes. But if you don’t know what the other person will do, you might end up randomising with a mixed strategy and could turn up with mismatched weapons, which is the worse outcome for both of you.

(So perhaps there’s a role for a tribe to coordinate here.)
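To see why randomising hurts, here is a minimal Python sketch of the mixed strategy equilibrium in the sword-or-spear game. The story doesn’t give payoffs, so the numbers here are assumed purely for illustration: 2 for coordinating on your favourite weapon, 1 for coordinating on the other person’s, 0 for a mismatch. The function names are hypothetical too.

```python
from fractions import Fraction

# Assumed payoffs (not given in the text): coordinating on your favourite weapon
# is worth 2, coordinating on the other person's favourite is worth 1, and
# turning up with different weapons is worth 0.
FAVOURITE, COMPROMISE, MISMATCH = Fraction(2), Fraction(1), Fraction(0)


def prob_own_favourite():
    """Probability each player puts on their own favourite weapon when mixing.

    It is pinned down by making the *other* player indifferent between weapons:
        (1 - p) * FAVOURITE + p * MISMATCH == p * COMPROMISE + (1 - p) * MISMATCH
    """
    gain_favourite = FAVOURITE - MISMATCH
    gain_compromise = COMPROMISE - MISMATCH
    return gain_favourite / (gain_favourite + gain_compromise)


def expected_payoff(p):
    """Your expected payoff when both players mix with probability p.

    You are indifferent between weapons, so evaluate the payoff from picking
    your favourite: you only match when the other person compromises.
    """
    return (1 - p) * FAVOURITE + p * MISMATCH


def prob_mismatch(p):
    """Chance that the two of you turn up with different weapons."""
    match_on_yours = p * (1 - p)   # you pick your favourite, they compromise
    match_on_theirs = (1 - p) * p  # you compromise, they pick their favourite
    return 1 - match_on_yours - match_on_theirs


p = prob_own_favourite()
print(p, expected_payoff(p), prob_mismatch(p))  # prints: 2/3 2/3 5/9
```

Under these assumed numbers, each of you stubbornly picks your favourite two thirds of the time, you mismatch in 5 out of 9 expeditions, and your expected payoff of 2/3 is lower than the 1 you would get even from the equilibrium you like less.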

Famine: Suppose you and another person have some land. You can each put one or two cows to graze on those pastures. If you each put one cow on the pasture, they graze and give you 1.5 units of food each. If one person puts two, the more intensive grazing means you only get 0.9 units of food per cow. And if you both put two cows, the pasture is ruined from overgrazing and no food is produced by the cows.

Because you want to place two cows if the other places one, we get two pure strategy Nash equilibria which give payoffs of (0.9, 1.8) or (1.8, 0.9). We can’t get to (1.5, 1.5), even though that would increase the total yield from the land, because we can’t credibly commit to not taking advantage of common resources.

(So perhaps there’s a role for the tribe to coordinate here.)
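The grazing game is small enough to check by brute force. This sketch uses the yields from the story (1.5, 0.9 and 0 units of food per cow) and tests every combination of cow counts to see whether either herder would want to deviate. The names `food` and `is_nash` are just labels chosen for the example.

```python
from itertools import product

COW_CHOICES = (1, 2)

# Food per cow, keyed by the total number of cows on the pasture.
# These are the numbers from the story.
FOOD_PER_COW = {2: 1.5, 3: 0.9, 4: 0.0}


def food(my_cows, their_cows):
    """Total food I get from my own cows."""
    return my_cows * FOOD_PER_COW[my_cows + their_cows]


def is_nash(mine, theirs):
    """True if neither herder gains by unilaterally changing their cow count."""
    i_am_content = all(food(mine, theirs) >= food(alt, theirs) for alt in COW_CHOICES)
    they_are_content = all(food(theirs, mine) >= food(alt, mine) for alt in COW_CHOICES)
    return i_am_content and they_are_content


pure_equilibria = [profile for profile in product(COW_CHOICES, repeat=2) if is_nash(*profile)]
print(pure_equilibria)  # prints: [(1, 2), (2, 1)]
```

One cow each is not on the list: whoever adds a second cow goes from 1.5 to 1.8 units of food, so the restraint doesn’t hold.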

Noisome beast: Both you and another person would like to slay this beast. But you don’t know what the other is planning on doing, and it requires both of you to kill the beast. If only one of you goes for the beast while the other hunts hare, then the person who went for the beast will end up with nothing.

Again, there are two pure strategy Nash equilibria, of beast-beast or hare-hare. But there’s no assurance that you will end up at the better equilibrium.

(So perhaps there’s a role for the tribe to coordinate here.)

Pestilence: Faced with a pestilence, you and another person can choose to socially distance or to mingle. If you both distance, you both benefit. If one person distances while the other person mingles, the latter benefits from the lower risk of infection without the hassle of distancing. But if both of you mingle, you will face a high risk of getting the disease.

Here, social distancing is a public good, but everyone has an incentive to free-ride off other people’s distancing. So we end up with one pure strategy Nash equilibrium that is worse than if you had both socially distanced.

(So perhaps there’s a role for the tribe to coordinate here.)

III.

What these four games[2] all have in common is that they are game-theoretic representations of the Edenic fall: rational human selfishness can lead to subpar equilibria. How can we avoid this?

The most obvious approach is to rely on top-down enforcement: to coordinate either sword or spear, to ensure only one cow per person is grazing, to get everyone hunting beasts instead of hare and to compel social distancing. But can we avoid this unnecessary consolidation of power? As it turns out, we can. We just need to make one small change.

The key assumption so far is that these are one-shot games. In reality, they are repeated games. You will interact with people over and over again. What the so-called folk theorems of game theory tell us is that this is A. Big. Fucking. Deal.

What they tell us is that basically any outcome that leaves each player better off than they could guarantee alone can be supported as a Nash equilibrium, assuming that we care enough about the future and play the games without knowing which one will be the last. I’ll leave the proof for a game theory textbook, but the basic intuition is as follows: if we know we’re going to keep on running into each other in the future, I know you can punish my defection and make it costlier than not defecting over the long run.
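That intuition can be made concrete with one standard punishment scheme, the grim trigger: cooperate until the other person defects, then defect forever. This is only a sketch of one special case, not the general folk theorem. The payoffs for the pestilence game are assumed for illustration (5 for mingling while the other distances, 3 each for both distancing, 1 each for both mingling), and `delta` stands for the probability that there will be another round.

```python
from fractions import Fraction

# Assumed per-round payoffs for the pestilence game (not given in the text).
TEMPTATION = Fraction(5)  # you mingle while the other person distances
REWARD = Fraction(3)      # both of you distance
PUNISHMENT = Fraction(1)  # both of you mingle


def value_of_cooperating(delta):
    """Expected total payoff from distancing in every round.

    With continuation probability delta this is REWARD * (1 + delta + delta**2 + ...).
    """
    return REWARD / (1 - delta)


def value_of_defecting(delta):
    """Expected total payoff from mingling once against a grim trigger player.

    You pocket TEMPTATION now, then both of you mingle in every later round.
    """
    return TEMPTATION + delta * PUNISHMENT / (1 - delta)


def cooperation_is_stable(delta):
    """True if nobody gains by breaking the distancing norm."""
    return value_of_cooperating(delta) >= value_of_defecting(delta)


def critical_delta():
    """Smallest continuation probability at which cooperation holds."""
    return (TEMPTATION - REWARD) / (TEMPTATION - PUNISHMENT)


print(critical_delta())                       # prints: 1/2
print(cooperation_is_stable(Fraction(3, 5)))  # prints: True
print(cooperation_is_stable(Fraction(2, 5)))  # prints: False
```

With these numbers, mutual distancing holds as long as each round has at least an even chance of being followed by another. If the relationship is likely to end soon, the threat of future punishment is too weak and we are back in the one-shot game.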

This means that in many cases, the optimal outcome could be achieved. But the operative word is could. It may be supported by a Nash equilibrium, but it is by no means the only Nash equilibrium. So all iteration does for us is change the problem from conflict to coordination. And coordination isn’t as easy as you might think.

Consider the game of hunting the beast. This is a coordination game. If you send me an email saying you’re going to hunt the beast, does that mean we’re now on the good equilibrium? No. It actually isn’t quite good enough, because you don’t know if I’ve seen your email. And since I know that you don’t know, I know you will stick with catching hare. Since you know I expect you to catch hare, you expect me to catch hare as well, so you will do so too.

Even if I am able to reply with an email, we still have the same problem. I don’t know if you’ve seen my reply. And so in spite of communication, this attempt at coordination has unravelled entirely. What we need is common knowledge, the epistemic condition where everyone knows the message, everyone knows that everyone knows, everyone knows that everyone knows that everyone knows ad infinitum.

IV.

Perhaps the coolest demonstration of common knowledge is the blue hat puzzle. Suppose you and your friend have blue hats on, but you each don’t know what colour your hat is. You can see the other person’s hat, but you can’t see your own and can’t communicate.

In front of both of you, I say that at least one of you has a blue hat. Then I ask whoever has a blue hat to raise their hand. Neither of you raises a hand. Then I ask again, and both of you raise your hands. What changed? And why did it only change on the second try?

Here’s what happened.

When I asked the first time, you already knew there was at least one blue hat. As did your friend. You could see it on each other’s heads. But me saying it in front of both of you meant that you now knew your friend knew this too and vice versa.

If you didn’t have a blue hat, your friend would have raised their hand on the first question, since they would have been able to deduce that they were the one with the blue hat. But they didn’t raise their hand. So when I asked the second time, each of you knew that your own hat had to be blue as well.

The trick is that even though everyone knew there was at least one blue hat, announcing it and making it common knowledge meaningfully changed your choices.
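The puzzle can be simulated by tracking which combinations of hats everyone still considers possible. What follows is a minimal sketch of that possible-worlds reasoning, assuming everyone is perfectly logical and everyone sees whether hands go up. The function names and the `announced` flag are inventions for this example.

```python
from itertools import product


def knows_own_hat_is_blue(person, world, worlds):
    """True if, in `world`, this person can be certain their hat is blue.

    They can't see their own hat, so they consider every remaining world
    that matches what they see on everyone else's head.
    """
    n = len(world)
    candidates = [
        w for w in worlds
        if all(w[other] == world[other] for other in range(n) if other != person)
    ]
    return all(w[person] for w in candidates)


def hands_raised(world, worlds):
    """Who would raise a hand in `world`, given the publicly possible worlds."""
    return tuple(knows_own_hat_is_blue(i, world, worlds) for i in range(len(world)))


def first_round_with_hands(actual, announced=True):
    """Return (round_number, hands) for the first round anyone raises a hand.

    `actual` is a tuple of booleans, True meaning a blue hat. Returns None if
    nobody can ever work it out. Assumes the announcement, if made, is true.
    """
    n = len(actual)
    worlds = set(product([False, True], repeat=n))
    if announced:
        # The public announcement rules out the all-non-blue world for everyone.
        worlds = {w for w in worlds if any(w)}
    for round_number in range(1, n + 1):
        hands = hands_raised(actual, worlds)
        if any(hands):
            return round_number, hands
        # Nobody moved, and everyone saw that: discard every world in which
        # somebody would have raised a hand.
        worlds = {w for w in worlds if not any(hands_raised(w, worlds))}
    return None


print(first_round_with_hands((True, True)))                   # prints: (2, (True, True))
print(first_round_with_hands((True, True), announced=False))  # prints: None
```

Without the announcement, nobody ever raises a hand, even though both of you can see a blue hat. The announcement tells you nothing about the hats that you didn’t already know; what it changes is what you know about each other’s knowledge.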

To return to our original problem, we don’t just need to have trust in the other person to cooperate. We need to have common knowledge of trust. Schelling points work because it is common knowledge they are Schelling points[3].

To underline how important cooperative common knowledge is, we can look at how this varies across different societies. For example, researchers Jean Ensminger and Joseph Henrich have gotten people around the world to play the dictator game[4] to see how they behave. We would expect Homo economicus to take all the money and run. But in Experimenting with Social Norms, what they find instead is that no society exhibits purely selfish behaviour, and in more complex societies, people’s behaviours and norms tend to be more cooperative.

In an extension to this game, there is a third party who can punish the dictator at some personal cost. Again, they find that people are not purely selfish, and are willing to enforce fairness even if it is costly to themselves. And again, they find that this is more true in more complex societies. So these norms count.

V.

Okay, detour into game theory over. Let’s get to building trust and common knowledge.

These can evolve organically in smaller societies, simply via the repeated interactions with our neighbours. If you were a hunter or gatherer, it was pretty easy to verify if fellow tribe members could be trusted or not, because you saw them all the time. And this could scale pretty far.

Consider the Icelandic Commonwealth, which existed from the 10th to 13th century. It had no executive branch or overarching governmental structure. Although courts existed, they were only there to arbitrate private disputes and did not directly enforce the law. And yet over 30,000 people – all of whom were organised into smaller tribes – co-existed and cooperated for over 300 years, producing public goods and keeping the peace[5]. They relied on the common knowledge of reciprocal behaviour to produce these cooperative outcomes.

Now to be clear, this did eventually break down. It broke down because chieftains were able to gradually consolidate control and centralise power. In these larger groups, you had to interact with more people and interacted with any particular person less. As we interact with more people than Dunbar’s number[6] (give or take an order of magnitude), it becomes harder for us to keep track of people’s individual societal standings and punish defection. Thus informal norms enforced by reputation and community mediation do fall apart, as they did in Iceland.

Today, most of us live in communities far larger than the goðar[7] could ever have imagined. Most people in our towns and cities are strangers to us, let alone the many people beyond national borders who we communicate and trade with. How do these groups stick together? What came after the tribes of the Icelandic Commonwealth?

That’s what we’ll find out next time: how we can scale up trust!

[1] A Nash equilibrium is an outcome where all the players are choosing their best response to the other players, i.e. no one wants to individually deviate from this outcome. In a pure strategy, players pick a certain response to every situation. In a mixed strategy, players assign probabilities to each pure strategy. The two pure strategy Nash equilibria in this game are sword-sword and spear-spear.

[2] In the literature, these four games are known respectively as the battle of the sexes game, the chicken game, the stag hunt game and the prisoners’ dilemma.

[3] A Schelling point is a decision that is so salient and obvious that everyone knows to default to it, even when they can’t communicate.

[4] In the dictator game, there are two people. One person is given some amount of money. They get to dictate how much of the money is given to the other person and how much they keep.

[6] Dunbar’s number is the idea that on average, the human neocortex can only keep up with ~150 stable and meaningful social relationships that have a reasonable degree of trust and obligation.

[7] The goðar were the chieftains of medieval Iceland.